Enhancing UX through Query and Dashboard Management on Metabase

At Jolimoi, data assets play an important role in productivity, efficiency and visibility, which is why we decided to clean up our data visualization tool (Metabase). Because of the number of assets in our organization, we divided the project into distinct phases. The goal was to move from noticeable disorder to a better-structured tool that will positively impact the company.

Our data visualization tool is a self-service business intelligence tool that helps our teams to:

- Explore data

- Create dashboards

- Generate alerts when data does not follow specific business-defined rules

All of this helps stakeholders speed up and ease their decision-making, and makes everything much more transparent for collaborators.

In this blog post, I will share the process we followed and the lessons the data team learned from this project.

Phase 1 - Interviews

This phase aimed to collect feedback from the different teams using Metabase.

During the interview process, we talked to our teams to understand how they interact with Metabase and to identify pain points and inefficiencies in the current process.

Interview methodology

We selected key users from each team to participate in recorded interviews. The goal was to collect insights and prioritize future improvements.

Questions

Choosing the right questions was important for the interview process: we wanted to understand users' roles and how they interact with Metabase and with data in general. We defined 3 stages of questions:

- Own interaction stage, where we wanted to understand how they proceed to get data in general terms. We asked questions like: Do you currently analyze any data? Which data, and how? How often? Do you use it on a daily, weekly or monthly basis?

- Metabase interaction stage, where we asked about data visualization tools and how well they know Metabase. We asked questions like: Have you used any visualization tool before? Are you currently using Metabase? Do you know what Metabase is?

- Role experience in Metabase stage, to learn how they incorporate it into their regular tasks. We asked questions like: Do you have links or question IDs pinned or saved as favorites so that saved questions can be accessed easily? Is it easy for you to find requests on Metabase using the search bar?

Data collection

After conducting the interviews, it was essential to prioritize improvements based on the data collected. Using the feedback received, we determined which issues and concerns mattered most and should be addressed first.

At this stage we found some common issues and patterns, such as:

- 👉 Most users didn't feel comfortable using metadata because of the number of keywords and names they encountered

- 👉 Users copy data into Google Sheets for their own analysis (high usage of raw data)

- 👉 Users are willing to gain more experience with Metabase

- 👉 The most requested improvement was to organize all the questions and tags to make searching easier

- 👉 Users use data from Metabase on a daily basis

- 👉 Some users knew how to use filters or summarize functions. These users use these features to search and refine the data presented by Metabase

- 👉 Some users bookmarked their frequently used questions in the browser

Phase 2 - Cleaning old Metabase assets

As an immediate action, we decided to bulk-archive questions based on a set of criteria, on a schedule that runs every quarter, and to create dashboards to track the cleaning process.

First, we needed to identify assets that had not been used for a long period of time and were creating confusion for users.

Cleaning assets rules

The data team must ensure this process is followed correctly and help any user who runs into a problem because of a missing request.

- 👉 Requests with no views in the past 6 months or with a maximum of 10 views, including any admin users' requests that satisfy these conditions

- 👉 Requests that have been archived for more than 6 months

- 👉 Every admin user is in charge of periodically cleaning their own assets

- 👉 Rules will not affect personal collections of any user

- 👉 Requests created by new Data Team members, or located in the Technical collection on Metabase, are excluded from automatic archival (this collection is used to store technical assets such as Metabase models)

- 👉 We encourage users to archive unused requests and to move regularly used requests from their personal collections to a common collection

- 👉 Unused requests in users' personal collections can be archived by the data team, without informing the user, if it deems them not useful

- 👉 All users should follow the Metabase naming convention for requests that are moved to common collections

- 👉 The Data Team is free to rename requests according to the naming convention when they are moved from personal to common collections

- 👉 Some assets are only reviewed once a year; we keep a special list to exclude them from archiving
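To make the automatic archival rules above more concrete, here is a minimal Python sketch of how they could be combined into a single check. It is only an illustration, not the script we actually run: the `Request` record, its field names and the `is_archive_candidate` helper are hypothetical, and it assumes that "a maximum of 10 views" refers to a request's total view count.

```python
from __future__ import annotations

from dataclasses import dataclass


# Hypothetical record for one saved request; the field names are
# illustrative and do not mirror Metabase's internal schema.
@dataclass
class Request:
    id: int
    collection: str
    in_personal_collection: bool
    by_new_data_team_member: bool
    views_last_6_months: int
    total_views: int


MAX_TOTAL_VIEWS = 10                 # assumed reading of "a maximum of 10 views"
EXCLUDED_COLLECTIONS = {"Technical"}  # holds technical assets such as models


def is_archive_candidate(req: Request, yearly_review_ids: set[int]) -> bool:
    """Return True if the request matches the automatic archival rules."""
    # Exclusions are checked first: they override the view-count criteria.
    if req.in_personal_collection:
        return False
    if req.by_new_data_team_member or req.collection in EXCLUDED_COLLECTIONS:
        return False
    if req.id in yearly_review_ids:
        return False
    # Assumed rule: not viewed recently, or rarely viewed overall.
    return req.views_last_6_months == 0 or req.total_views <= MAX_TOTAL_VIEWS


# Made-up sample data: (id, collection, personal, new member, views 6m, total)
requests = [
    Request(1, "Sales", False, False, 0, 250),
    Request(2, "Sales", False, False, 40, 300),
    Request(3, "Technical", False, False, 0, 0),
    Request(4, "Finance", False, False, 0, 5),
    Request(5, "Personal", True, False, 0, 0),
    Request(6, "Marketing", False, False, 3, 8),
]
candidates = [r.id for r in requests if is_archive_candidate(r, yearly_review_ids={4})]
print(candidates)  # [1, 6]
```

Checking the exclusions before the view counts keeps the protected cases (personal collections, the Technical collection, yearly reviews) safe even if the thresholds change later.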

Bulk cleaning

Because a large number of different assets needed to be reduced, we tested the cleaning process on a smaller scale before executing it in bulk.

This process raised several points. We had to be careful with requests that fall into special scenarios, such as requests consulted once a year, personal collections, or admin requests that are a work in progress, so that the bulk cleaning did not affect users' ongoing operations.

Documentation updated

We created reference documents on the process and rules to give users a better understanding of the origins of the project and of how we manage the bulk erasing of requests, so that the process stays visible to them.

Follow Up

We scheduled some Slack alerts to ensure the process is followed correctly and simultaneously.

We also organized Metabase training sessions at basic and intermediate levels to ensure the tool is used well and the rules are well understood.

Conclusions

In conclusion, keeping our Metabase clean is important for improving the user experience.

By regularly cleaning out these "old" assets, we can ensure that users are provided with accurate and up-to-date information. A cleaner interface simplifies the user journey, keeps users from being overwhelmed by unnecessary content, and increases trust in the tool's reliability.

How do we measure success?

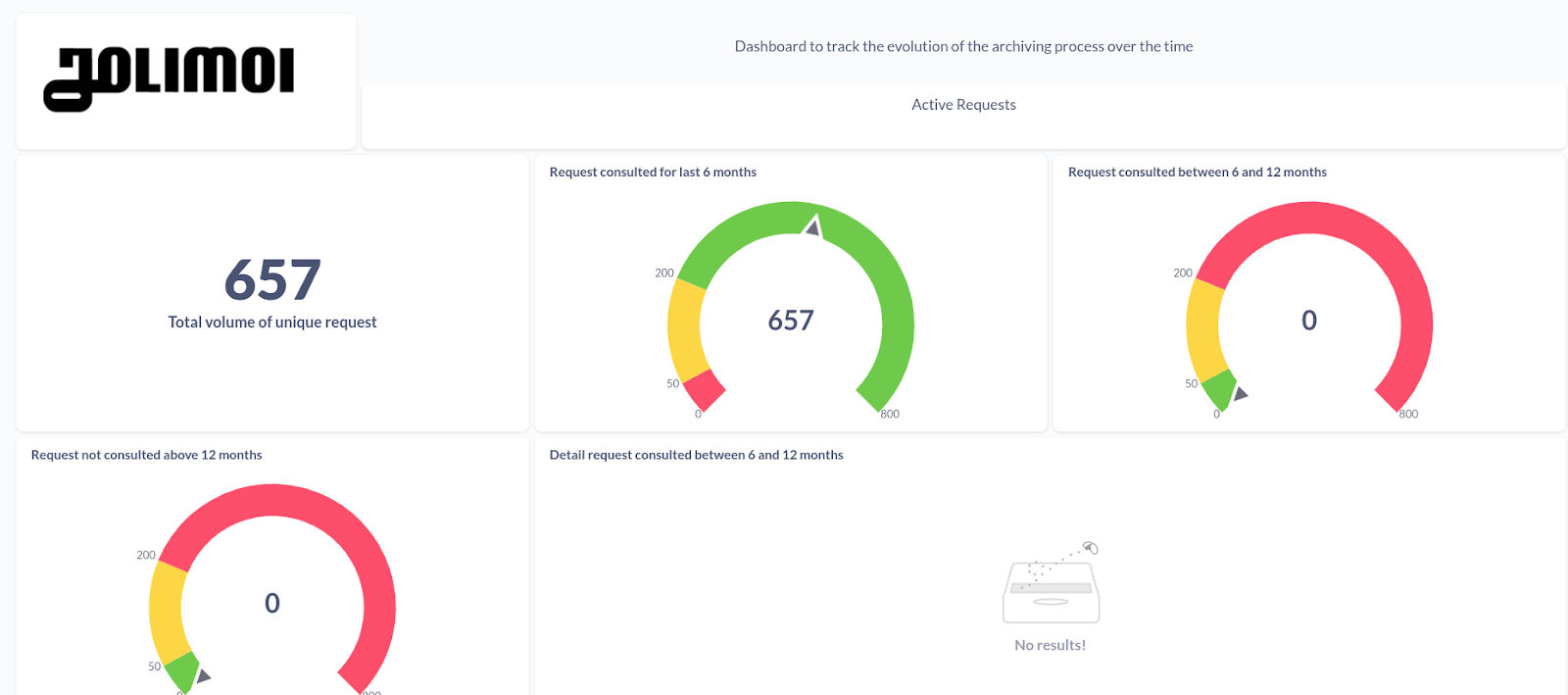

At the performance level, we created a "cleaning" monitoring dashboard. We use it to quickly track KPIs on the assets cleaned.

The dashboard displays key metrics, including the overall volume of archived requests as well as those archived within the last six months. This gives us valuable insight into the performance and effectiveness of the archiving process.

Simultaneously, we are enhancing the monitoring of active requests by implementing visualizations that help to identify requests that are receiving no views, allowing us to queue them for archiving once they meet the threshold.
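As a rough illustration of the metrics described above, the following Python sketch computes them from a list of request records. It is a sketch under stated assumptions rather than the query behind our dashboard: the `RequestStatus` record and the `cleaning_kpis` helper are hypothetical, and six months is approximated as 183 days.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


# Hypothetical record; field names are illustrative only.
@dataclass
class RequestStatus:
    id: int
    archived_on: Optional[date]  # None while the request is still active
    views_last_6_months: int


SIX_MONTHS = timedelta(days=183)  # rough approximation of six months


def cleaning_kpis(rows: list[RequestStatus], today: date) -> dict[str, int]:
    """Compute the three counts tracked on the monitoring dashboard."""
    archived = [r for r in rows if r.archived_on is not None]
    recent = [r for r in archived if today - r.archived_on <= SIX_MONTHS]
    # Active requests with no views are the ones to queue for archiving.
    unviewed = [
        r for r in rows
        if r.archived_on is None and r.views_last_6_months == 0
    ]
    return {
        "archived_total": len(archived),
        "archived_last_6_months": len(recent),
        "active_without_views": len(unviewed),
    }


# Made-up sample data for illustration.
rows = [
    RequestStatus(1, date(2023, 1, 15), 0),
    RequestStatus(2, date(2024, 5, 1), 0),
    RequestStatus(3, None, 0),
    RequestStatus(4, None, 12),
]
kpis = cleaning_kpis(rows, today=date(2024, 6, 30))
print(kpis)
# {'archived_total': 2, 'archived_last_6_months': 1, 'active_without_views': 1}
```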